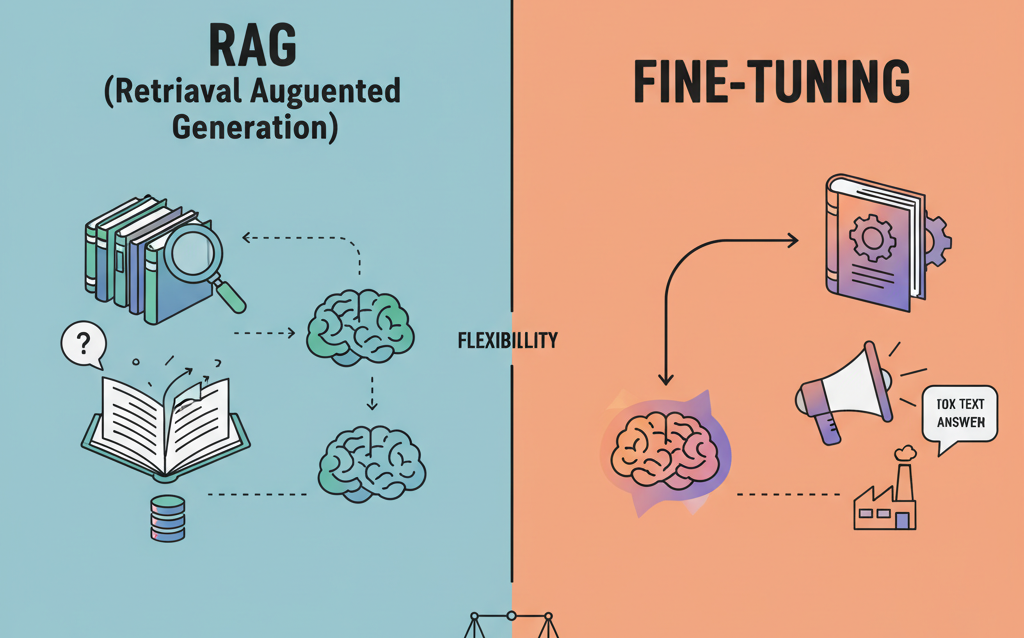

Introduction to RAG vs Fine-Tuning

Every business adopting AI eventually faces one critical question:

Should we fine-tune a model or build with Retrieval-Augmented Generation (RAG)?

Both techniques promise customization—but each serves different goals. At AiBridze Technologies, we’ve implemented both across finance, healthcare, and property sectors.

(Learn more about Retrieval-Augmented Generation (RAG) in Google’s research overview.) The right choice often defines your project’s success.

Understanding the Two Approaches

What Is Fine-Tuning?

Fine-tuning teaches an existing large language model (LLM) to behave like an expert in your domain.

It’s like training an employee who already knows English — now they must learn your company’s language.

It modifies model weights using your data so the model reflects your tone, terminology, and logic.
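To make "modifies model weights" concrete, here is a toy sketch rather than a real LLM workflow. It assumes a two-parameter linear model standing in for the network, a hypothetical `fine_tune` helper, and made-up domain data. The point is only that fine-tuning continues gradient descent from already-trained weights instead of starting from scratch.

```python
# Toy illustration: fine-tuning continues gradient descent from pretrained weights.
# The model, the data, and the learning rate are all hypothetical.

def predict(weights, x):
    w, b = weights
    return w * x + b

def mse(weights, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(weights, data, lr=0.05, epochs=300):
    """Run plain gradient descent on `data`, starting from `weights`."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

pretrained = (1.0, 0.0)  # "general" behaviour: y = x
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # domain rule: y = 2x + 1

tuned = fine_tune(pretrained, domain_data)
loss_before = mse(pretrained, domain_data)
loss_after = mse(tuned, domain_data)
print(f"loss before: {loss_before:.3f}, loss after: {loss_after:.6f}")
```

After the update the domain knowledge lives inside the weights themselves, which is why changing it later means running training again.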

Best for:

- Highly specialized tasks (medical summaries, legal drafting)

- Stable datasets that rarely change

- Offline or private-deployment models

💡 Example:

A financial reporting bot fine-tuned on thousands of audited statements consistently produces compliant, regulation-ready reports.

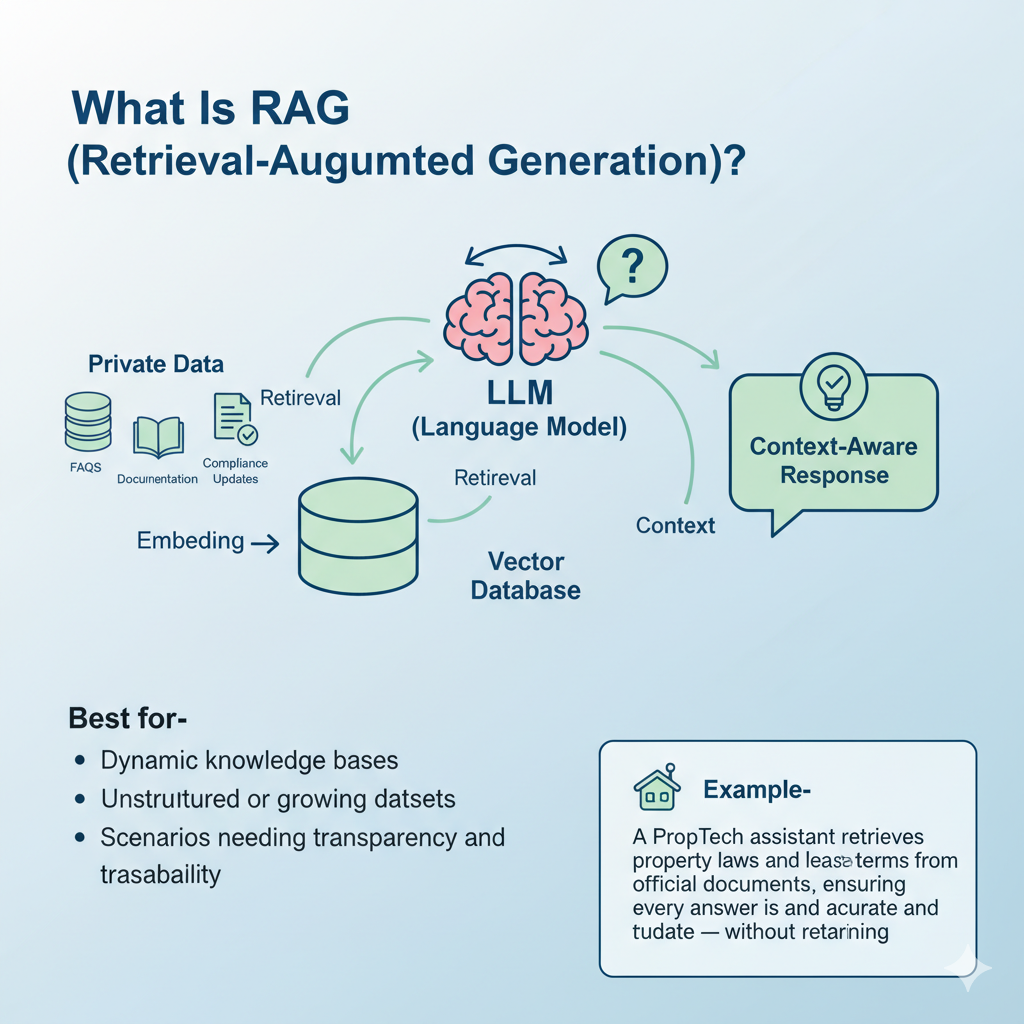

What Is RAG (Retrieval-Augmented Generation)?

RAG connects an LLM to your private data, typically through a vector database. Instead of memorizing everything, the system retrieves the most relevant snippets at runtime and passes them to the model, which then generates a context-aware response.
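The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration, not a production pipeline: it assumes word-count vectors in place of learned embeddings, an in-memory list in place of a vector database, and hypothetical document IDs and texts. The final LLM call is left out; the sketch stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    return ranked[:k]

def build_prompt(query, snippets):
    """Put the retrieved snippets, tagged with their IDs, in front of the question."""
    context = "\n".join(f"[{s['id']}] {s['text']}" for s in snippets)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge base
docs = [
    {"id": "lease-01", "text": "A tenant must give 60 days notice before ending a lease."},
    {"id": "hr-07", "text": "Employees accrue annual leave every month."},
    {"id": "fin-03", "text": "Invoices are payable within 30 days of receipt."},
]

query = "How many days notice must a tenant give to end a lease?"
top = retrieve(query, docs, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

Because each snippet carries its ID into the prompt, the model can be asked to cite it, which is where the traceability of RAG comes from.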

Best for:

- Dynamic knowledge bases (FAQs, documentation, compliance updates)

- Unstructured or growing datasets

- Scenarios needing transparency and traceability

💡 Example:

A PropTech assistant retrieves property laws and lease terms from official documents, so its answers stay grounded in current sources without retraining.

RAG vs Fine-Tuning — Key Comparison

| Aspect | Retrieval-Augmented Generation (RAG) | Fine-Tuning |
| --- | --- | --- |
| Data Location | External (vector DB) | Internal (model weights) |
| Update Speed | Near-instant: upload new docs | Requires retraining |
| Security | Documents stay in your own data store | Training data is exposed to the training pipeline |
| Cost | Lower setup & maintenance | High GPU and compute cost |
| Accuracy | Depends on retrieval quality | Depends on training data |
| Ideal Use Cases | Dynamic knowledge bases | Deep domain expertise |

When to Choose Fine-Tuning

Fine-tuning excels when your data is structured, repetitive, and domain-specific.

- You own clean, labeled datasets.

- You need consistent tone & reasoning.

- You’re deploying models in controlled environments.

💡 Example:

A healthcare organization fine-tunes an LLM to interpret radiology reports in clinical language — reducing errors and standardizing terminology.

When to Choose RAG

RAG is ideal when content evolves frequently or answers must be verifiable.

- Frequent updates (knowledge bases, manuals, policies)

- Transparent answers with document citations

- Quick deployment without retraining cycles
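The list above can be illustrated with a small sketch of why updates are fast: adding a document only changes the index, never the model. `PolicyIndex`, its word-overlap scoring, and the policy texts are all hypothetical; a real deployment would use a vector database and an embedding model.

```python
import re

def tokens(text):
    """Lowercased word set used for a simple overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class PolicyIndex:
    """Hypothetical in-memory index standing in for a vector database."""

    def __init__(self):
        self._docs = {}

    def add(self, doc_id, text):
        # Adding or overwriting a document takes effect immediately;
        # no model weights are touched.
        self._docs[doc_id] = text

    def search(self, query):
        """Return the best-matching document with its source ID, or None."""
        q = tokens(query)
        best_id, best_score = None, 0.0
        for doc_id, text in self._docs.items():
            d = tokens(text)
            union = q | d
            score = len(q & d) / len(union) if union else 0.0  # Jaccard overlap
            if score > best_score:
                best_id, best_score = doc_id, score
        if best_id is None:
            return None
        return {"source": best_id, "text": self._docs[best_id]}

index = PolicyIndex()
index.add("travel-2023", "Travel expenses require manager approval.")

question = "remote work equipment allowance"
before = index.search(question)   # nothing relevant is indexed yet

index.add("remote-2024", "Remote work equipment allowance is capped per employee.")
after = index.search(question)    # the new policy is found right away

print(before)
print(after)
```

The `source` field returned with each hit is what lets the assistant cite the document behind an answer.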

💡 Example:

A global enterprise uses AiBridze’s RAG framework for multilingual compliance search.

Employees ask “What’s the latest UAE PDPL rule for HR data?” and receive cited, policy-based answers instantly.

Hybrid Strategy: The Best of Both Worlds

In enterprise systems, combining RAG with fine-tuning often delivers the most value:

- Fine-tune for style, tone, and reasoning logic.

- Use RAG for real-time facts and context.

This hybrid architecture combines deep expertise with live data—an approach AiBridze uses across industries to build adaptive AI assistants.
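One way to picture the hybrid split is as two pluggable parts: a retriever that supplies facts and a fine-tuned model that supplies style. The sketch below is only an outline under that assumption. `hybrid_answer`, `fake_retrieve`, and `fake_fine_tuned_model` are hypothetical names, and both stand-ins are trivial stubs rather than real components.

```python
from typing import Callable, List

def hybrid_answer(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Fetch current facts with RAG, then let the fine-tuned model phrase the answer."""
    facts = retrieve(question)
    prompt = "Facts:\n" + "\n".join(f"- {fact}" for fact in facts)
    prompt += f"\n\nQuestion: {question}"
    return generate(prompt)

def fake_retrieve(question: str) -> List[str]:
    # Stub for a retrieval layer returning up-to-date snippets.
    return ["Policy P-12 requires two approvals."]

def fake_fine_tuned_model(prompt: str) -> str:
    # Stub for a fine-tuned LLM: it only adds the house style
    # to the first fact found in the prompt.
    first_fact = prompt.splitlines()[1][2:]
    return "Per company policy: " + first_fact

answer = hybrid_answer(
    "How many approvals does P-12 need?",
    fake_retrieve,
    fake_fine_tuned_model,
)
print(answer)
```

Keeping the two parts behind plain function interfaces means the knowledge base can change daily while the model is retrained only when tone or reasoning needs to change.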

AiBridze Implementation Example

Use Case — Internal Knowledge Assistant for a Logistics Enterprise

Challenge:

The client’s ERP had 1400 tables. Employees couldn’t run plain-English queries or access the latest process changes.

AiBridze Solution:

- Fine-tuned a compact LLM to understand ERP schema & query syntax.

- Integrated RAG to fetch live data and business rules.

- Added a chat interface for natural queries like “Show pending invoices by vendor last month.”
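With a schema of this size, one common pattern is to retrieve only the tables relevant to a question before asking the model for SQL. The sketch below illustrates that pattern and is not a description of the actual AiBridze implementation. The four-table `SCHEMA`, the keyword-overlap scoring, and the helper names are assumptions made for the example.

```python
import re

# Hypothetical slice of an ERP schema: table name -> column names
SCHEMA = {
    "invoices": ["invoice_id", "vendor_id", "status", "due_date", "amount"],
    "vendors": ["vendor_id", "vendor_name", "country"],
    "shipments": ["shipment_id", "origin", "destination", "shipped_at"],
    "employees": ["employee_id", "full_name", "department"],
}

def words(text):
    return set(re.findall(r"[a-z]+", text.lower()))

def singular(word):
    """Very rough singularization so 'invoices' matches 'invoice'."""
    return word[:-1] if word.endswith("s") else word

def relevant_tables(question, schema, limit=2):
    """Rank tables by keyword overlap between the question and table/column names."""
    q = {singular(w) for w in words(question)}
    scores = {}
    for table, columns in schema.items():
        names = [table, *columns]
        vocab = {singular(w) for name in names for w in words(name.replace("_", " "))}
        scores[table] = len(q & vocab)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [t for t in ranked[:limit] if scores[t] > 0]

def schema_prompt(question, schema):
    """Build a text-to-SQL prompt that includes only the selected tables."""
    lines = [f"{t}({', '.join(schema[t])})" for t in relevant_tables(question, schema)]
    return "Tables:\n" + "\n".join(lines) + f"\n\nWrite SQL for: {question}"

question = "Show pending invoices by vendor last month."
selected = relevant_tables(question, SCHEMA)
prompt = schema_prompt(question, SCHEMA)
print(prompt)
```

Narrowing the prompt to a handful of tables keeps it short and gives the model less room to pick the wrong table or column.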

Results:

- 90% reduction in SQL dependency

- Instant answers with verified sources

- Secure on-prem deployment that keeps data inside the client's own infrastructure

Business Benefits

- Speed & Agility – Update data instantly without retraining.

- Security & Compliance – Data stays within enterprise infrastructure.

- Scalability – Adapts from SMBs to global enterprises.

- Accuracy & Transparency – Citations let users verify answers and help reduce AI hallucinations.

- Cost Efficiency – RAG deployments cost 60–70% less than large-scale fine-tuning.

How AiBridze Helps

We start with a Data Feasibility and Architecture Review to select the best approach for your business.

Our team handles everything end to end:

- Custom LLM Development (OpenAI, Llama, Mistral, Falcon)

- Vector Database Setup (Qdrant, Pinecone, FAISS)

- Prompt Orchestration & Evaluation

- Secure API Integrations & Monitoring

- Performance Optimization & Governance

Conclusion

Whether you’re training a domain-specific model or launching an enterprise chatbot, the right choice between RAG and Fine-Tuning defines your success.

At AiBridze, we help organizations combine accuracy, scalability, and security through tailored AI architectures that fit their goals and budget.

Call to Action

Ready to make your AI truly yours?

Discover how AiBridze can customize an LLM solution for your business.

👉 Contact Us Today to start your AI journey.